Serverless SFTP in AWS

At Narrative Science we believe in giving our engineers the freedom to innovate. We do this through an annual 3-day hackathon and quarterly hack days. In addition, we have an Incubation Team that works on prototyping ideas not otherwise found on our roadmap.

In this post, I would like to showcase one of the projects that another engineer and I completed during our most recent annual hackathon. Specifically, we took one of our most critical servers and turned it into a serverless system using Amazon Transfer, Lambda, and S3.

What were we replacing?

Some of our clients prefer to send and receive large amounts of data at one time. We facilitate this through the use of an SFTP server.

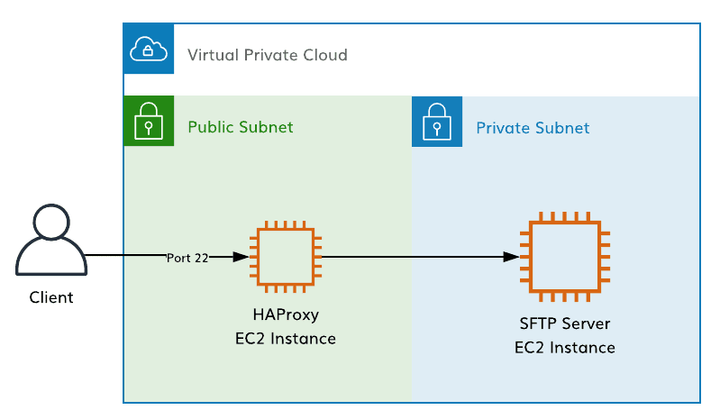

Below is a simple architecture overview:

The SFTP server needs to meet a handful of high-level requirements:

- Strict user isolation

- High availability

- Encryption of data in-flight and at rest

- Durability of a client’s data

- Ability to run incoming files through our Quill pipeline

Shortcomings

As a SOC 2 (Type II) compliant business, we need to apply regular security updates to our infrastructure. This can be challenging for a system that needs to be highly available and has persistent state. Our existing solution used VMs (virtual machines) and moved around large EBS (Elastic Block Store) volumes. Each time we needed to apply updates, we had to orchestrate the transfer of that data to ensure none was lost. This cost us a fair amount of engineering time: roughly 6 hours every month.

The SFTP server features a service (codename Watchdog) that runs incoming files through our Quill pipeline. It watches the file system, and when files are dropped into specific directories, it tells Quill which files to pick up for which project. Typically, Quill then places the resulting stories back on the SFTP server for delivery to the customer. Watchdog is one of the oldest pieces of software still running in production. It is not well understood by our engineers and still uses Python 2.7, which will reach end of life in early 2020, so at the very least it would need to be upgraded to Python 3.x.

User management was always manual. There was no way for client support teams to administer the users of the system, which cost us more engineering time. Non-technical employees were required to log in to the SFTP machines using SSH and manage users on a Linux VM, which is error-prone.

Moving to serverless

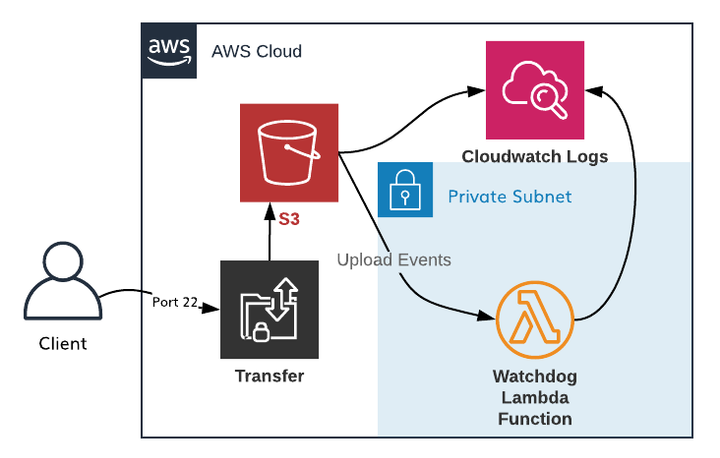

Amazon recently released their Transfer service, which is a 100 percent serverless SFTP solution backed by S3 and IAM. The only thing we would really need to build ourselves is a replacement for the Watchdog service, using Lambda.

Moving to a serverless model would mean we don’t have to spend engineering time doing security maintenance since Amazon would handle all of it.

How this meets our requirements

- Strict user isolation. This means that each user who logs in can see only their own files. This can be handled by IAM policies. As part of their Transfer service, AWS added a few variables to IAM that allow us to do this.

- High availability is guaranteed by Amazon. We want to provide service to our customers at all times since we never know when they will want to hit us. This comes for free as part of moving to serverless.

- Data durability. It is critical to us that we never ever lose a client’s data, or have it become corrupted. This is also guaranteed by Amazon, as it is in S3.

- Encryption of client data in flight and at rest. In flight, SFTP runs over SSH, so transfers are encrypted by the protocol itself. At rest, encryption is turn-key with S3, and we have used bucket encryption many times, so this was simple for us to adopt.

- Watchdog: A service that watches for file uploads and runs those files through our Quill platform asynchronously. This can be replicated by triggering a Lambda function when objects are uploaded to S3 in a specific place.

A serverless Watchdog is great because it doesn’t “crash” ever. S3 Events can be fired ad nauseam at this Lambda and it will scale massively, while not costing us very much. It also logs directly to a CloudWatch Log stream, meaning we can grant engineers access to see these logs, or even set up alerts when Watchdog fails.
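To make the Lambda-based Watchdog more concrete, here is a minimal sketch of a handler that reads an S3 event notification and works out which user folder each upload landed in. It is an illustration under assumptions, not our production code: `notify_quill` is a hypothetical stand-in for the call into Quill, and the key layout (`<user>/<path to file>`) is assumed for the example.

```python
from urllib.parse import unquote_plus


def notify_quill(user, path):
    """Hypothetical stand-in for the call that tells Quill about a new file."""
    print(f"new file for {user}: {path}")


def parse_records(event):
    """Return (bucket, key) pairs for every object named in an S3 event."""
    uploads = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in event notifications (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        uploads.append((bucket, key))
    return uploads


def handler(event, context):
    """Lambda entry point: notify Quill once per uploaded object."""
    notified = []
    for bucket, key in parse_records(event):
        # Assumed layout: the first path segment is the user's home folder.
        user, _, rest = key.partition("/")
        if not rest:
            continue  # Skip objects that are not inside a user folder.
        notify_quill(user, f"s3://{bucket}/{key}")
        notified.append((user, key))
    return notified
```

Because the handler is a plain function of the event, it can be exercised locally with a hand-built event dictionary before it is ever deployed.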

As a bonus, we are now able to completely reproduce this using CloudFormation. We could spin up as many of these servers as we need to. All we would need to do is bootstrap the users and their keys. If other products require an SFTP server, we can easily create one in seconds.

Building in CloudFormation

We leveraged AWS SAM to build, package and deploy our SFTP template to CloudFormation. Below are the key resources and highlights from our template.

Creating the SFTP resource is very straightforward:

    ServerlessSFTP:
      Type: AWS::Transfer::Server
      Properties:
        EndpointType: VPC_ENDPOINT
        EndpointDetails:
          VpcEndpointId: !Ref ServerlessSFTPVPCEndpoint
        LoggingRole: !GetAtt SFTPLoggingRole.Arn
        Tags:
          - Key: Environment
            Value: !Ref Environment
          - Key: Platform
            Value: !Ref Platform
          - Key: Function
            Value: !Ref Function

Creating a bucket is necessary if you plan to automate event triggers based on S3 actions:

    SFTPS3Bucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: !Sub ${Environment}-${Platform}-${Function}
        AccessControl: Private
        PublicAccessBlockConfiguration:
          RestrictPublicBuckets: true
          IgnorePublicAcls: true
        BucketEncryption:
          ServerSideEncryptionConfiguration:
            - ServerSideEncryptionByDefault:
                SSEAlgorithm: AES256
        Tags:
          - Key: Environment
            Value: !Ref Environment
          - Key: Platform
            Value: !Ref Platform
          - Key: Function
            Value: !Ref Function
          - Key: DataClassification
            Value: Secure

It’s important to configure at least one test user to stand up alongside the server. This allows for fast and simple testing of your deployment. User setup can be a little tricky, however, because there are a number of different resources you’ll need to create and link together:

- An IAM Role

- A Managed Policy

- An SFTP User

A good practice is to create a fairly open Managed Policy and then use the SFTP User’s (scope-down) Policy property to restrict access to only that user’s home directory:

    TestUserRole:
      Type: AWS::IAM::Role
      Properties:
        RoleName: !Sub ${Environment}-${Platform}-${Function}-user
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: "Allow"
              Principal:
                Service:
                  - "transfer.amazonaws.com"
              Action:
                - "sts:AssumeRole"

    SFTPBucketPolicy:
      Type: AWS::IAM::ManagedPolicy
      Properties:
        ManagedPolicyName: !Sub ${Environment}-${Platform}-${Function}-s3
        Roles: [!Ref TestUserRole]
        PolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Sid: AllowListingOfUserFolder
              Action:
                - s3:ListBucket
                - s3:GetBucketLocation
              Effect: Allow
              # Soft Reference to the Bucket to get around circular dependency
              Resource: !Sub arn:aws:s3:::${Environment}-${Platform}-${Function}*
            - Sid: HomeDirObjectAccess
              Effect: Allow
              Action:
                - s3:PutObject
                - s3:GetObject
                - s3:DeleteObjectVersion
                - s3:DeleteObject
                - s3:GetObjectVersion
              # Soft Reference to the Bucket to get around circular dependency
              Resource: !Sub arn:aws:s3:::${Environment}-${Platform}-${Function}*

    TestUser:
      Type: AWS::Transfer::User
      Properties:
        UserName: !Sub ${Environment}-${Platform}-${Function}-testuser
        HomeDirectory: !Sub /${SFTPS3Bucket}/testuser
        # This needs to be a json string
        Policy: |
          {
            "Version": "2012-10-17",
            "Statement": [
              {
                "Sid": "AllowListingOfUserFolder",
                "Action": [
                  "s3:ListBucket"
                ],
                "Effect": "Allow",
                "Resource": [
                  "arn:aws:s3:::${transfer:HomeBucket}"
                ],
                "Condition": {
                  "StringLike": {
                    "s3:prefix": [
                      "${transfer:HomeFolder}/*",
                      "${transfer:HomeFolder}"
                    ]
                  }
                }
              },
              {
                "Sid": "HomeDirObjectAccess",
                "Effect": "Allow",
                "Action": [
                  "s3:PutObject",
                  "s3:GetObject",
                  "s3:DeleteObjectVersion",
                  "s3:DeleteObject",
                  "s3:GetObjectVersion",
                  "s3:GetObjectACL",
                  "s3:PutObjectACL"
                ],
                "Resource": "arn:aws:s3:::${transfer:HomeDirectory}*"
              }
            ]
          }
        Role: !GetAtt TestUserRole.Arn
        ServerId: !GetAtt ServerlessSFTP.ServerId
        # SshPublicKeys: REDACTED
        Tags:
          - Key: Environment
            Value: !Ref Environment
          - Key: Platform
            Value: !Ref Platform
          - Key: Function
            Value: !Ref Function
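Because the `Policy` property has to be a JSON string, hand-editing it inside YAML is easy to get wrong. When bootstrapping many users, one option is to generate the string from a Python dictionary instead. The sketch below mirrors the test user's policy; the function names `scope_down_policy` and `home_directory` are hypothetical, and the code only builds the values, leaving the actual user creation to whatever tooling you prefer.

```python
import json


def scope_down_policy():
    """Build the scope-down policy as a JSON string.

    The ${transfer:...} placeholders are left verbatim so that the
    Transfer service can resolve them for each user.
    """
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowListingOfUserFolder",
                "Action": ["s3:ListBucket"],
                "Effect": "Allow",
                "Resource": ["arn:aws:s3:::${transfer:HomeBucket}"],
                "Condition": {
                    "StringLike": {
                        "s3:prefix": [
                            "${transfer:HomeFolder}/*",
                            "${transfer:HomeFolder}",
                        ]
                    }
                },
            },
            {
                "Sid": "HomeDirObjectAccess",
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObjectVersion",
                    "s3:DeleteObject",
                    "s3:GetObjectVersion",
                    "s3:GetObjectACL",
                    "s3:PutObjectACL",
                ],
                "Resource": "arn:aws:s3:::${transfer:HomeDirectory}*",
            },
        ],
    }
    return json.dumps(policy, indent=2)


def home_directory(bucket, user):
    """Home directory in the /<bucket>/<folder> form used by the template."""
    return f"/{bucket}/{user}"


if __name__ == "__main__":
    print(home_directory("example-bucket", "testuser"))
    print(scope_down_policy())
```

Generating the string this way means a malformed policy fails in Python rather than at deploy time, and every user gets an identical document.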

Wiring up a Lambda function to trigger on S3 events is a standard use case. However, our first attempts created a few circular dependencies in the CloudFormation configuration. The quickest solution was to use !Sub calls to build soft links between resources in the template (instead of !Ref).

    WatchdogFunction:
      # S3 Event Driven Lambda Function
      # Notifies Quill when Customers Upload data to the SFTP
      Type: AWS::Serverless::Function
      Properties:
        FunctionName: !Sub ${Environment}-${Platform}-${Function}-watchdog
        Handler: lambda_handler.handler
        Runtime: python3.6
        CodeUri: ./src/
        Description: >
          Notifies Quill when customers upload new data to the SFTP
        MemorySize: 1024
        Timeout: 180
        Environment:
          Variables:
            # Soft link to the Secret Name
            SECRET_NAME: !Sub ${Environment}/${Platform}/${Function}/watchdog
            # Soft link to the SFTPS3Bucket
            CONFIG_BUCKET: !Sub ${Environment}-${Platform}-${Function}
            CONFIG_KEY: watchdog.config
        VpcConfig:
          SecurityGroupIds: [!Ref WatchdogSecurityGroup]
          SubnetIds:
            - !If
              - Production
              - !FindInMap [ EnvironmentMap, "prod-quill", PrivateSubnet]
              - !FindInMap [ EnvironmentMap, "nonprod-quill", PrivateSubnet]
        Policies:
          - AWSLambdaExecute # AWS Managed Policy
          - !Ref WatchdogManagePolicy # Stack Managed Policy
          - !Ref SFTPBucketPolicy # Stack Managed Policy
        Events:
          WatchdogS3Event:
            Type: S3
            Properties:
              Bucket: !Ref SFTPS3Bucket
              Events: s3:ObjectCreated:*
        Tags:
          Environment: !Ref Environment
          Platform: !Ref Platform
          Function: !Ref Function
          Component: watchdog
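The circular dependency problem can be illustrated with a small dependency graph. The sketch below is a simplified model, not how CloudFormation resolves templates internally: it assumes the bucket depends on the function (through the S3 event notification) and shows that a hard `!Ref` from the function back to the bucket closes a loop, while a name built with `!Sub` does not create an edge at all. The `deploy_order` helper is hypothetical, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import CycleError, TopologicalSorter


def deploy_order(dependencies):
    """Return a valid creation order, or None if the graph has a cycle."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError:
        return None


# Each resource maps to the set of resources it depends on.
hard_refs = {
    # The bucket's event notification needs the function to exist.
    "SFTPS3Bucket": {"WatchdogFunction"},
    # CONFIG_BUCKET written as a !Ref to the bucket adds the reverse edge.
    "WatchdogFunction": {"SFTPS3Bucket"},
}
soft_refs = {
    "SFTPS3Bucket": {"WatchdogFunction"},
    # CONFIG_BUCKET built with !Sub from parameters adds no edge.
    "WatchdogFunction": set(),
}

print(deploy_order(hard_refs))  # None
print(deploy_order(soft_refs))  # ['WatchdogFunction', 'SFTPS3Bucket']
```

The trade-off of a soft link is that nothing enforces the name match, so the bucket name pattern has to be kept identical everywhere it is rebuilt.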

Challenges

Price. Unfortunately, we discovered that this setup costs us slightly more to host than our previous solution. Most of that comes from Amazon Transfer itself, which is a new service, while S3, IAM, and Lambda combined cost almost nothing. We still believe that this project was worthwhile: for a little more hosting cost, we need effectively zero engineering time to maintain it.

Static IP Address. Many clients need to whitelist the IP addresses that traffic leaving their network is allowed to reach. Amazon Transfer does not use a static IP address, nor can it be assigned one. We would need to add some kind of proxy in front of it, like a Network Load Balancer. This would slightly bump up the price and increase the complexity a bit, but the system would still be 100 percent serverless and low maintenance.

Conclusion

Thanks to the assistance of a few other intrepid engineers, we were able to complete this project in less than 2 working days. Given how critical this system is, it is remarkable how quickly we were able to do this. Amazon has made this extremely easy to set up.

We now have an easily reproducible, highly available, durable and secure SFTP server that requires zero time from engineering to maintain. The time saved in maintenance alone has made this worth it. In fact, the time it took us to complete this project was roughly the same amount of time we would spend per month just maintaining it.

One big thing that was pointed out to me afterward was the effect on morale surrounding our infrastructure. The SFTP server does not drive new revenue and required engineers to do regular maintenance. Given that it would crash unexpectedly for a variety of unrelated reasons, many engineers grew to dread dealing with this server.

Hackathons at Narrative Science are not always about prototyping new features or driving revenue. Sometimes we work on projects that eliminate that dread and reduce the maintenance overhead of our engineers. This ultimately frees us to focus less on maintenance and more on creating quality products for our customers.