Disaster Averted

I have been on the Technical Staff at TF2Maps.net for about a year as of this writing. One of the things that bothered me most when I first came on board was how disorganized and chaotic the infrastructure was. There wasn't a lot of it, but it was old and fragile, and it was about to develop some serious hardware issues that needed to be addressed.

Unexpected hardware failures

In November of 2021, we discovered that our hardware was beginning to fail. We had been running a physical host out of a New York colo for nearly 8 years.

Disks and power supplies usually last around 2-4 years, so it's impressive we made it as far as we did, though scary, because I was afraid our hardware could fail at basically any time.

While we were trying to allocate more disk to one of our VMs, our hypervisor, Proxmox, reported that it had detected bad sectors. Super oh no. These disks were in RAID 0, meaning we had no redundancy at all: a single failed disk would take the whole array with it.
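To put the RAID 0 risk in rough numbers: a striped array is lost as soon as any single member disk fails. Assuming, purely for illustration, n independent disks that each fail with probability p over the same period (the actual disk count and failure rates on our host are not known here), the probability of losing the array is:

```latex
\begin{aligned}
P_{\text{RAID 0}} &= 1 - (1 - p)^{n} \\
P_{\text{RAID 0}}\big|_{n=2,\;p=0.05} &= 1 - 0.95^{2} = 0.0975 \\
P_{\text{RAID 1}}\big|_{n=2,\;p=0.05} &= p^{2} = 0.0025
\end{aligned}
```

So a hypothetical two-disk stripe nearly doubles the risk of a single disk, while a two-disk mirror (RAID 1) would only lose data if both disks failed, ignoring the rebuild window.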

Additionally, our colo contract was set to expire in February 2022, meaning we would need to move all of our systems over to something new with the quickness.

Lay of the land

The very first thing I did was assess the problem at hand and determine the scope of what we would do about it.

So the obvious question is, what needs to move?

I logged into every VM we had and took a thorough look to account for everything we would need to move or replace.

There was a lot more than I expected:

- Bind9 DNS

- Dovecot email server

- Apache with 22 distinct virtual hosts

  - Many of which were just static HTML sites

  - One was the forum software

  - One was our custom-built map queue site

- The Discord bot

- MySQL database

- Almost 1TB of static attachment data on disk

Bills Bills Bills

I also spoke with the incumbent system administrator about hosting costs and found that we were consistently spending more on hosting than we received in donations, with the difference coming out of his pocket. Not great, and he definitely was not happy about it.

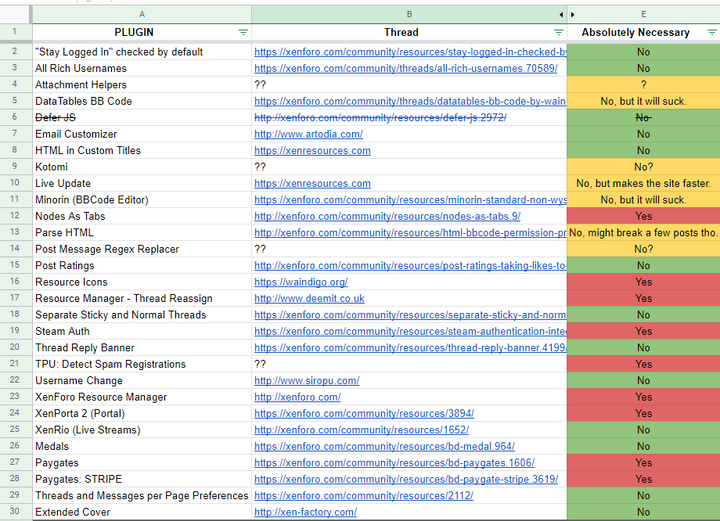

After some research, I determined that we would likely need to upgrade our version of the Xenforo forum software in order to realize significant cost savings. So I tasked one of my "interns" with evaluating every existing Xenforo plugin we ran on the old site, assessing its criticality (must have, nice to have, don't need) and whether we had a migration path for that plugin to Xenforo 2.

This research was critical to learning if this was even possible, or if we would need to look into other solutions.

Side note: Google Sheets is great for this kind of ongoing collaboration.
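The decision rule behind that spreadsheet is simple enough to sketch in code. The Python below is a hypothetical illustration, not something we actually ran: the `Plugin` fields mirror the criticality and migration-path columns described above, and the names `Criticality`, `Plugin`, and `triage` are made up for the example.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Criticality(Enum):
    MUST_HAVE = "must have"
    NICE_TO_HAVE = "nice to have"
    DONT_NEED = "don't need"


@dataclass
class Plugin:
    name: str
    criticality: Criticality
    has_xf2_path: bool  # Is there a Xenforo 2 version or an equivalent replacement?


def triage(plugins: list[Plugin]) -> dict[str, list[str]]:
    """Sort plugins into buckets that decide whether the upgrade is viable."""
    buckets: dict[str, list[str]] = {"blockers": [], "migrate": [], "drop": []}
    for plugin in plugins:
        if plugin.criticality is Criticality.DONT_NEED:
            buckets["drop"].append(plugin.name)
        elif plugin.has_xf2_path:
            buckets["migrate"].append(plugin.name)
        elif plugin.criticality is Criticality.MUST_HAVE:
            # A must-have with no Xenforo 2 path could sink the whole plan.
            buckets["blockers"].append(plugin.name)
        else:
            # A nice-to-have with no path is a loss we can accept.
            buckets["drop"].append(plugin.name)
    return buckets
```

Under this rule, the upgrade is only viable if `triage` returns an empty `blockers` list; anything in it either needs a replacement or sends you back to look at other solutions.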

Project Objectives

- Have fully operational systems moved to new hardware before our colo contract expired

- Reduce our hosting bill by at least 25%, but ideally as much as possible

- Lower the maintenance overhead for all systems going forward

- Bring us up to current security best practices

Where to host it all?

So the first order of business was to see what our hosting options were. At a glance, I figured we could leverage a cloud provider to replace a good amount of functionality, and possibly use S3 to host a lot of our content very cheaply.

I put together a cost estimate for...

AWS was cost-prohibitive, though it would have been the most feature-rich option. OVH would mostly have put us in a similar situation to the one we were already in.

So it was between DigitalOcean and Vultr. We chose Vultr because it had slightly cheaper VM options and far more locations, which would be nice for ad-hoc game servers.

Bill reduction strategy

We had a lot of inefficiency in our hosting that we could fix.

First were all the static HTML sites we were serving. Vultr has an S3-compatible object storage offering, so I assigned my "intern" the task of moving all of those sites there. This saves us disk overhead and reduces the number of things we have to manage overall, since it's set-and-forget.

This was also a test of how well their S3 offering works. The biggest hurdle was going to be figuring out whether we could serve our terabyte of attachment data out of S3 as well, since a disk of that size on Vultr was going to be very expensive.
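For a static-site move like this, the main thing to get right is that each file lands under a sensible object key with the correct Content-Type; otherwise browsers may download HTML pages instead of rendering them. A minimal Python sketch of building that upload plan, assuming a hypothetical per-site key `prefix` (the actual bucket layout isn't described here):

```python
from __future__ import annotations

import mimetypes
from pathlib import Path


def plan_site_upload(site_root: str, prefix: str) -> list[tuple[str, str, str]]:
    """Return (local_path, object_key, content_type) for every file under site_root."""
    root = Path(site_root)
    plan = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        # Object keys always use forward slashes, whatever the local OS uses.
        key = f"{prefix}/{path.relative_to(root).as_posix()}"
        # Without an explicit Content-Type, browsers may download pages instead of rendering them.
        content_type, _encoding = mimetypes.guess_type(path.name)
        plan.append((str(path), key, content_type or "application/octet-stream"))
    return plan
```

Each tuple could then be handed to an S3 client, for example boto3's `upload_file` with `ExtraArgs={"ContentType": content_type}` pointed at Vultr's S3-compatible endpoint; the bucket name and endpoint are assumptions left out of the sketch.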

In researching this, I learned we could only do this if we upgraded Xenforo to 2.x (we were still running 1.4). Unfortunately, there were no docs specifically for Vultr, but I was able to get it working, mostly by reading the source code of a few plugins. Xenforo 2 also let us do a lot of things we had wanted to do for years but couldn't because of XF 1 limitations.

Between Vultr's cheap VMs, S3 as a storage backend, and ad-hoc game servers, I believed we could significantly reduce our hosting costs.

The Prototypes

The first prototype was a clean install of Xenforo 2 on a fresh Vultr VM so we could see what we wanted the end state to be.

This prototype had no data in the database and no attachments. We did it this way because it was faster to set up, avoided any conversion issues, and was much cheaper; I wanted a clean reference setup. Using our earlier research, we installed all the plugins we believed would cover our needs, then did a lot of testing to be certain.

We spent a month or so setting up the clean install and getting familiar with Xenforo 2. I also assigned my "intern" the task of building a UI theme reminiscent of our branding and old website, on a more modern base. We quickly realized how many hacks the old site had in its CSS and theme, so that was a fun adventure in CSS and HTML, something I hadn't done much of in a long time.

The next prototype site I stood up was a clone of the existing site without attachments. Its purpose was to test the migration path from version 1.x to 2.x. Basically, what were we going to run into when we did this for real? The process was actually pretty smooth, though a bunch of non-critical things didn't make it over cleanly, and we had to come back later and fix those up.

The Great Migration

We had started moving some items in January in preparation for the VM move:

- DNS served out of Vultr

- Email from a free email provider

- Static HTML sites

- Discord Bot

- Grafana

There was absolutely no way to do this without taking the site offline while data moved. Thankfully, nothing bad happens if TF2Maps is down for a few days. I wish it worked this way with production systems at work...

The VM move was a tense 3 days. If something in this process failed, we could have lost a lot of data, so we needed to be very precise and methodical. Here were the exact steps; throughout, I was in constant communication with my intern and other "stakeholders":

- Create new (final) VM for the Xenforo site inside Vultr

- Put up a maintenance page to categorically prevent any DB writes from occurring

- Move the SQL databases onto the new VM, and save a backup in S3

- Attach a 1TB disk to the VM, and start the static file sync

  - This ended up taking 3 days to complete

- Clone the Xenforo 1 site

- Perform the Xenforo upgrade

- Set up all the new plugins and theme

- Other Staff thoroughly inspect the site to knock out obvious issues first

- Create a thread on the forums for end users to report bugs

- Open it up to the public (Scary!)
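With no room for data loss, it helps to verify a long-running copy like the 3-day attachment sync before trusting it. One way, sketched here in Python as a hypothetical check rather than the exact procedure we followed, is to build a SHA-256 manifest of the source and destination trees and diff them:

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def build_manifest(root: str) -> dict[str, str]:
    """Map each file's path (relative to root, POSIX style) to its SHA-256 hex digest."""
    base = Path(root)
    manifest = {}
    for path in base.rglob("*"):
        if path.is_file():
            digest = hashlib.sha256()
            with path.open("rb") as f:
                # Read in 1 MiB chunks so large attachments are never loaded into memory whole.
                for chunk in iter(lambda: f.read(1 << 20), b""):
                    digest.update(chunk)
            manifest[path.relative_to(base).as_posix()] = digest.hexdigest()
    return manifest


def diff_manifests(src: dict[str, str], dst: dict[str, str]) -> dict[str, list[str]]:
    """Report files missing from dst, unexpected extras in dst, and content mismatches."""
    return {
        "missing": sorted(src.keys() - dst.keys()),
        "extra": sorted(dst.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }
```

Hashing a terabyte on both sides is slow, so a cheaper first pass could compare file sizes only; the checksum pass then catches silent corruption that sizes alone would miss.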

The Post Migration Chaos

As usual with projects like this, we got dozens of bug reports and small issues.

On top of that, our first salvo was only meant to get us out of the colo expiration problem. We had not yet moved our attachments into S3 or set up ad-hoc game servers.

We would not realize our cost savings until the attachment data was finally in S3. Thankfully, this part was easy, because the prototype phase had already shown me what to do. I had to take the site offline for a few more days to do it. Once the data was moved, I detached the disk but kept it around as a hot backup in case something went badly wrong with S3. After a week, I decommissioned that disk, since it was quite expensive.

I assigned as many bugs to my intern as I felt he could reasonably handle while I tackled the more difficult ones. Together, we fixed roughly 50 issues over the course of a few weeks. The bugs were all over the place, and a lot of them involved things we hadn't deliberately set or created. Troubleshooting systems you're unfamiliar with is, uhhh, fun.

A major upgrade to security

Now, I don't want to knock the people who set things up before I came along, because they did a good job of keeping the ship afloat, but let's just say the state of security was a bit, uhhh, nuanced.

Thankfully, I've done server hardening many, many times before. Over the next few weeks, I set up:

- Least privilege database users

- SSH key authentication only

- No root user logins

- SSH allowlisted to administrators' IPs only

- Stateful firewall, plus fail2ban to ban IPs with repeated failed logins

- Ulimits

- Apache virtual hosts run in discrete sandboxes

- Logrotate configs

- Auto-renewing wildcard SSL cert from Let's Encrypt

- Least privilege firewalls inside of Vultr

- Forced HTTPS redirection
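Several items on that list live in the SSH daemon's config, which makes them easy to verify automatically. The sketch below audits `sshd_config` text against a baseline; `PasswordAuthentication`, `PermitRootLogin`, and `PubkeyAuthentication` are real OpenSSH keywords, but the `EXPECTED` baseline and the parser are simplified assumptions (it ignores `Include` directives and stops at the first `Match` block).

```python
from __future__ import annotations

import re

# Hypothetical baseline mirroring the hardening list; the keywords are real sshd_config options.
EXPECTED = {
    "passwordauthentication": "no",  # SSH key authentication only
    "permitrootlogin": "no",         # no root logins
    "pubkeyauthentication": "yes",
}


def parse_sshd_config(text: str) -> dict[str, str]:
    """Parse the global section of sshd_config into lowercase keyword -> value."""
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Keywords are case-insensitive; tolerate whitespace or "=" between keyword and value.
        parts = re.split(r"[\s=]+", line, maxsplit=1)
        keyword = parts[0].lower()
        if keyword == "match":
            break  # Everything after a Match line is conditional; audit only the global section.
        value = parts[1].strip() if len(parts) > 1 else ""
        settings.setdefault(keyword, value)  # sshd uses the first value it sees for a keyword.
    return settings


def audit(text: str) -> list[str]:
    """Return human-readable problems; an empty list means the baseline is met."""
    settings = parse_sshd_config(text)
    problems = []
    for keyword, wanted in EXPECTED.items():
        actual = settings.get(keyword)
        # Treat an unset keyword as a problem: the hardening should be explicit, not a default.
        if actual is None or actual.lower() != wanted:
            problems.append(f"{keyword}: expected {wanted!r}, found {actual!r}")
    return problems
```

On a live host, feeding `audit` the output of `sshd -T`, which prints the effective configuration with lowercase keywords, is more reliable than parsing the file by hand.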

Outcomes

- Data move completed with 3 days to spare!

- We did not lose any data whatsoever

- The steady state hosting cost was reduced by over 65%!

- Security is a lot better now

- Automation now exists for all the mundane things

  - Automatic DB backups to S3 on a weekly cadence

  - Automatic SSL renewal

  - Xenforo -> Discord rank syncing

- The site is much better protected from disasters given most of the data is in S3 and the rest is regularly backed up

- Multiple people on Staff can now help me operate the site thanks to Xenforo 2 being a lot easier to administer.
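A weekly database backup job like the one listed above can be quite small. This Python sketch is illustrative rather than our actual script: it assumes `mysqldump` can authenticate on its own (for example via `~/.my.cnf`), uses a hypothetical `db-backups/<db>/<ISO year>-W<week>.sql.gz` key layout, and leaves the S3 upload as an injected `upload` callback.

```python
from __future__ import annotations

import datetime
import gzip
import subprocess
from typing import Callable


def backup_key(db_name: str, when: datetime.date) -> str:
    """Hypothetical key layout: one object per ISO week, e.g. db-backups/forum/2022-W10.sql.gz."""
    year, week, _weekday = when.isocalendar()
    return f"db-backups/{db_name}/{year}-W{week:02d}.sql.gz"


def dump_command(db_name: str) -> list[str]:
    # --single-transaction dumps InnoDB tables from a consistent snapshot without locking them;
    # --routines also includes stored procedures and functions.
    return ["mysqldump", "--single-transaction", "--routines", db_name]


def run_backup(
    db_name: str,
    upload: Callable[[str, bytes], None],
    when: datetime.date | None = None,
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> str:
    """Dump one database, gzip it, hand it to `upload`, and return the object key used."""
    when = when or datetime.date.today()
    # check=True raises CalledProcessError if mysqldump fails, so a broken dump is never uploaded.
    result = run(dump_command(db_name), check=True, capture_output=True)
    # Compressing in memory is fine for a modest forum database; a huge one would want streaming.
    payload = gzip.compress(result.stdout)
    key = backup_key(db_name, when)
    upload(key, payload)
    return key
```

In practice, `upload` could wrap boto3's `put_object(Bucket=..., Key=key, Body=data)` against Vultr's S3-compatible endpoint, and cron or a systemd timer would call `run_backup` once a week. Keying by ISO week means a rerun in the same week overwrites that week's object instead of piling up duplicates.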

Learnings

To be successful here, I had to wear a lot of different hats. I was basically the lead developer, project manager, UI designer, QA, security, and finance. A few people were technical enough that I could slice off some simple tasks for them, which helped a lot. Shout out to my intern!

The most difficult part was cutting through all the people who wanted to shoot from the hip and get something out quickly, while also managing people's expectations. Most of the people involved had never been part of a project like this before, so I also had to wear the hat of mentor and guide everyone through a process I had developed from doing similar migration projects at work. I have a systematic process for this, but it was definitely challenging getting folks on board and trusting that I knew what I was doing. There was a great deal of skepticism that I would actually be able to pull this off, and to a degree, I was unsure myself whether I could deliver on some of the goals, mostly those relating to cost optimization. The research step eased a lot of people's concerns because it gave me proof that we could actually pull this off.

The other challenge was managing the expectations of our user base. We probably have about 1000 active users, so we got absolutely slammed with requests for a few weeks. My intern helped triage a lot of them, which took a real burden off my shoulders and let me focus on bigger-picture things. Of course, every person feels the issue they are reporting is the most important one. You have to be patient and communicate that we acknowledge the issue and will get to it when we can, given its difficulty to fix and its value to the community as a whole, while also reminding them that I am doing this without being paid at all. Some issues, however, we probably just aren't going to fix because they're so difficult and low-value. You have to accept that in projects like this, those things are going to happen.

Another thing I did here was coach the rest of staff to act on my behalf, getting in front of issues and snowballing discussions about the new site. I definitely succeeded in rallying dozens of people to assist me when I needed it. I think the key is being transparent and very clear about what you need from people, and modeling the behavior you want.

The Process in Bullet form

- Assess the scope of the problem at hand, i.e. what do we need to solve for?

- Research solutions

- Create prototypes to learn about a solution so you can pivot your research if needed

- Set up cost estimates

- Estimate the scope of work involved, and determine the must-haves

- Try to organize the work so you can farm it out to multiple people

- Prioritize work based on the constraints of the problem (such as our colo contract expiration)

- Action the minimum critical scope as your first salvo

- Openly seek feedback

- Iterate on improvements and bug fixes until completion

Don't forget to communicate openly with stakeholders about where you are in this process, and be upfront about your unknowns. Seek to resolve those unknowns during research as much as possible.